How We Migrated from AWS S3 to MinIO, Saving $1.2K/Year Without Downtime

Step-by-step AWS S3 to MinIO migration: Save $1.2K/year with self-hosted object storage. Docker, NGINX, zero-downtime strategy for developers.

Facing the Cloud Cost Crisis

When new efficiency policies demanded a 20% reduction in our cloud spend, I knew we needed a radical solution. Our AWS S3 storage—housing 5TB of critical data—had become a significant cost center. After evaluating alternatives, we chose MinIO: an open-source, S3-compatible object storage solution that promised both cost savings and greater control.

The challenge was monumental: migrate terabytes of data while maintaining 40+ production endpoints with zero downtime.

Why MinIO Won Our Infrastructure Vote

MinIO emerged as the clear winner for several reasons. Its S3 compatibility meant our existing applications could transition with minimal code changes. Because it is open source, there were no licensing fees and far less vendor lock-in. Most importantly, self-hosting gave us complete data sovereignty and control over our storage environment.

The business case was undeniable: $100/month in direct savings, totaling $1,200 annually, plus enhanced security and flexibility.
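As a rough sanity check on these figures, the sketch below estimates the monthly cost of storing 5 TB on S3 Standard and annualizes a monthly saving. The price constant is an assumption (roughly the published us-east-1 rate for the first 50 TB tier), as is billing in binary gigabytes. The estimate ignores request, egress, and self-hosting costs, so treat it as a back-of-the-envelope number and not as our actual bill.

```python
# Assumption: S3 Standard list price in us-east-1, first 50 TB tier.
S3_STANDARD_USD_PER_GB_MONTH = 0.023
# Assumption: storage is billed in binary gigabytes (1 TB = 1,024 GB).
GB_PER_TB = 1024


def s3_storage_cost_per_month(tb: float, usd_per_gb: float = S3_STANDARD_USD_PER_GB_MONTH) -> float:
    """Storage-only S3 Standard cost; requests, egress, and other tiers are ignored."""
    return tb * GB_PER_TB * usd_per_gb


def annualize(monthly_usd: float) -> float:
    """Scale a monthly figure to a full year."""
    return monthly_usd * 12


if __name__ == "__main__":
    print(f"5 TB on S3 Standard: ~${s3_storage_cost_per_month(5):.2f}/month")
    print(f"$100/month saved: ${annualize(100):,.0f}/year")
```

Run as a script, it prints about $117.76/month for the storage alone, which makes a net saving of $100/month plausible, and $1,200/year for that saving annualized.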

The Migration Blueprint: Breaking Down Complexity

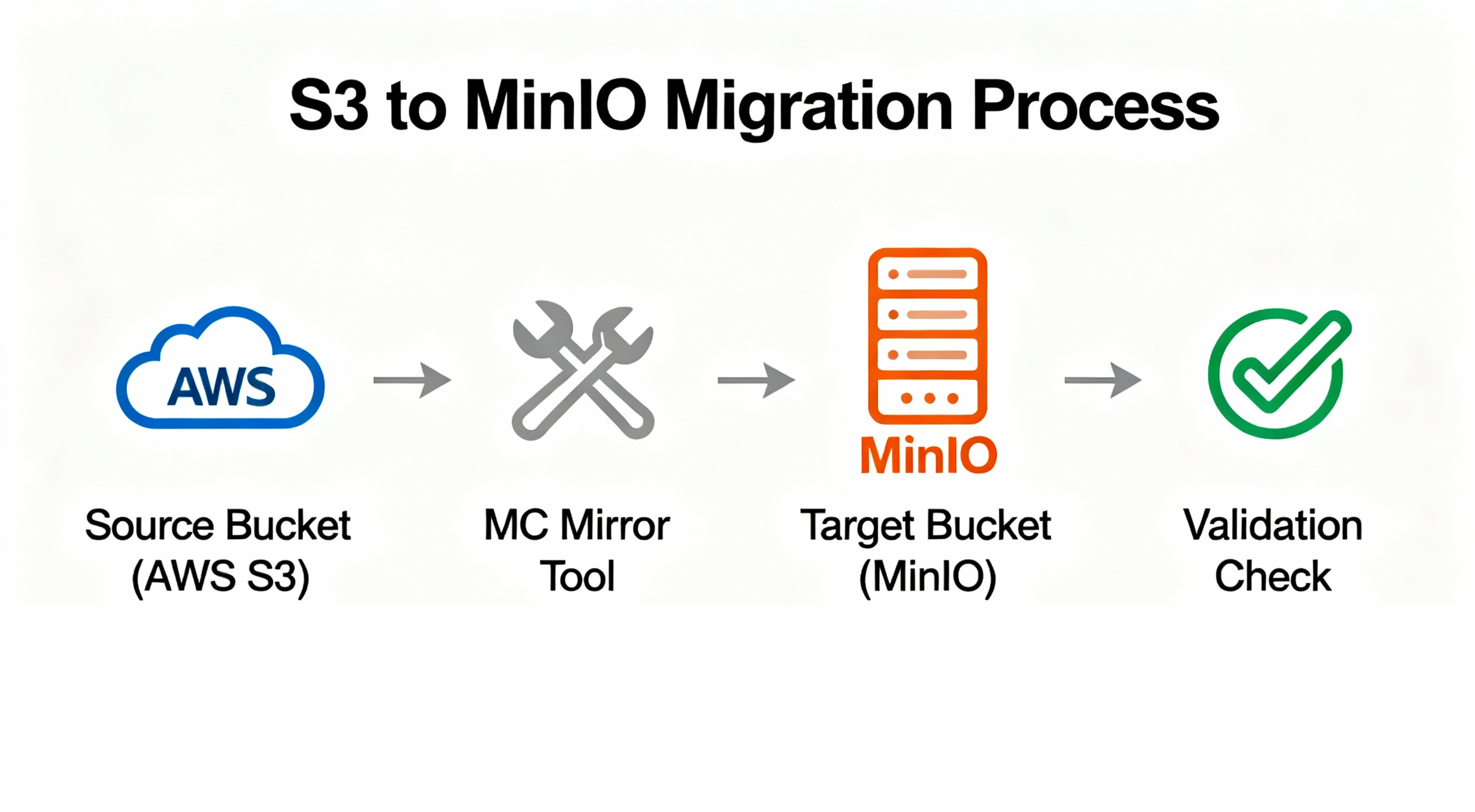

We broke this large project into four manageable phases:

1. Infrastructure Setup - Deploying MinIO with Docker and configuring NGINX as a reverse proxy

2. Data Migration Pipeline - Using MinIO's mc tool for seamless S3→MinIO transfer

3. Codebase Refactoring - Updating our Python services from boto3 to the MinIO SDK

4. Comprehensive Testing - Validating all storage-dependent endpoints

Docker & NGINX: The Foundation of Our Success

We containerized MinIO using Docker to ensure environment consistency from development to production. This eliminated the classic "works on my machine" problem and allowed us to spawn test instances in seconds.
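A minimal sketch of such a setup, assuming a single-node MinIO container: the Python function below only builds the `docker run` argument list (image `quay.io/minio/minio`, S3 API on port 9000, web console on 9001, data persisted to a host directory). The container name, host path, and credentials are hypothetical placeholders, not our production values.

```python
from typing import List

# Assumption: the official MinIO image; pin a release tag instead of "latest" in production.
MINIO_IMAGE = "quay.io/minio/minio"


def minio_docker_run_cmd(
    data_dir: str,
    root_user: str,
    root_password: str,
    name: str = "minio",
    api_port: int = 9000,
    console_port: int = 9001,
) -> List[str]:
    """Build the argv for a single-node MinIO container (nothing is executed here)."""
    return [
        "docker", "run", "-d",
        "--name", name,
        "--restart", "unless-stopped",
        # Host ports mapped to MinIO's S3 API (9000) and web console (9001).
        "-p", f"{api_port}:9000",
        "-p", f"{console_port}:9001",
        # Keep object data on the host so the container can be recreated freely.
        "-v", f"{data_dir}:/data",
        "-e", f"MINIO_ROOT_USER={root_user}",
        "-e", f"MINIO_ROOT_PASSWORD={root_password}",
        MINIO_IMAGE,
        "server", "/data", "--console-address", ":9001",
    ]


if __name__ == "__main__":
    print(" ".join(minio_docker_run_cmd("/srv/minio", "admin", "change-me-please")))
```

Passing the list to `subprocess.run(cmd, check=True)` starts the container without shell-quoting issues. For anything beyond a throwaway test instance, `--env-file` is preferable to `-e`, since command-line arguments are visible in process listings.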

NGINX served as our reverse proxy, providing a single entry point (https://storage.example.com) with SSL termination, rate limiting, and path rewriting. This abstraction layer proved crucial: because clients only ever addressed storage.example.com, we could swap the backend behind it without any client-side changes.
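One way such a proxy could be configured is sketched below, rendered from Python to keep all examples in one language. The hostname, certificate paths, upstream address, and rate limit are assumptions. Path rewriting is deliberately left out: S3 clients sign the request path (Signature V4), so a rewrite that changes the path MinIO sees will break signature checks unless it is carefully matched, and the `Host` header is preserved for the same reason.

```python
def render_nginx_server(
    server_name: str = "storage.example.com",
    upstream: str = "http://127.0.0.1:9000",
    cert_path: str = "/etc/ssl/certs/storage.pem",
    key_path: str = "/etc/ssl/private/storage.key",
    rate: str = "10r/s",
) -> str:
    """Render an NGINX fragment that terminates TLS and proxies to MinIO's S3 API."""
    # Literal NGINX braces are doubled ({{ }}) because this is an f-string.
    return f"""\
# Rate-limit state shared by all workers, keyed by client IP.
limit_req_zone $binary_remote_addr zone=minio_api:10m rate={rate};

server {{
    listen 443 ssl;
    server_name {server_name};

    ssl_certificate     {cert_path};
    ssl_certificate_key {key_path};

    # No body-size cap, and stream uploads/downloads instead of buffering them.
    client_max_body_size 0;
    proxy_buffering off;
    proxy_request_buffering off;

    location / {{
        limit_req zone=minio_api burst=20 nodelay;

        # Keep the original Host header; S3 Signature V4 covers it.
        proxy_set_header Host $http_host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header X-Forwarded-Proto $scheme;

        # Keep-alive connections to the upstream.
        proxy_http_version 1.1;
        proxy_set_header Connection "";
        proxy_pass {upstream};
    }}
}}
"""


if __name__ == "__main__":
    print(render_nginx_server())
```

The output is a fragment meant to be included inside NGINX's `http {}` block (for example from `conf.d/`); `client_max_body_size 0` and the disabled buffering keep large objects from being capped or spooled to disk by the proxy.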

The Actual Migration: `mc` Magic and Code Refactoring

MinIO's mc (MinIO Client) tool was our migration workhorse. With simple commands, we mirrored our S3 buckets to MinIO while maintaining object metadata and structure.

```shell
# Configure aliases for S3 and MinIO
mc alias set s3 https://s3.amazonaws.com AWS_ACCESS_KEY AWS_SECRET_KEY
mc alias set minio http://minio.example.com MINIO_ACCESS_KEY MINIO_SECRET_KEY

# Mirror the buckets
mc mirror s3/bucket-name minio/bucket-name
```

On the code side, we refactored our Python services:

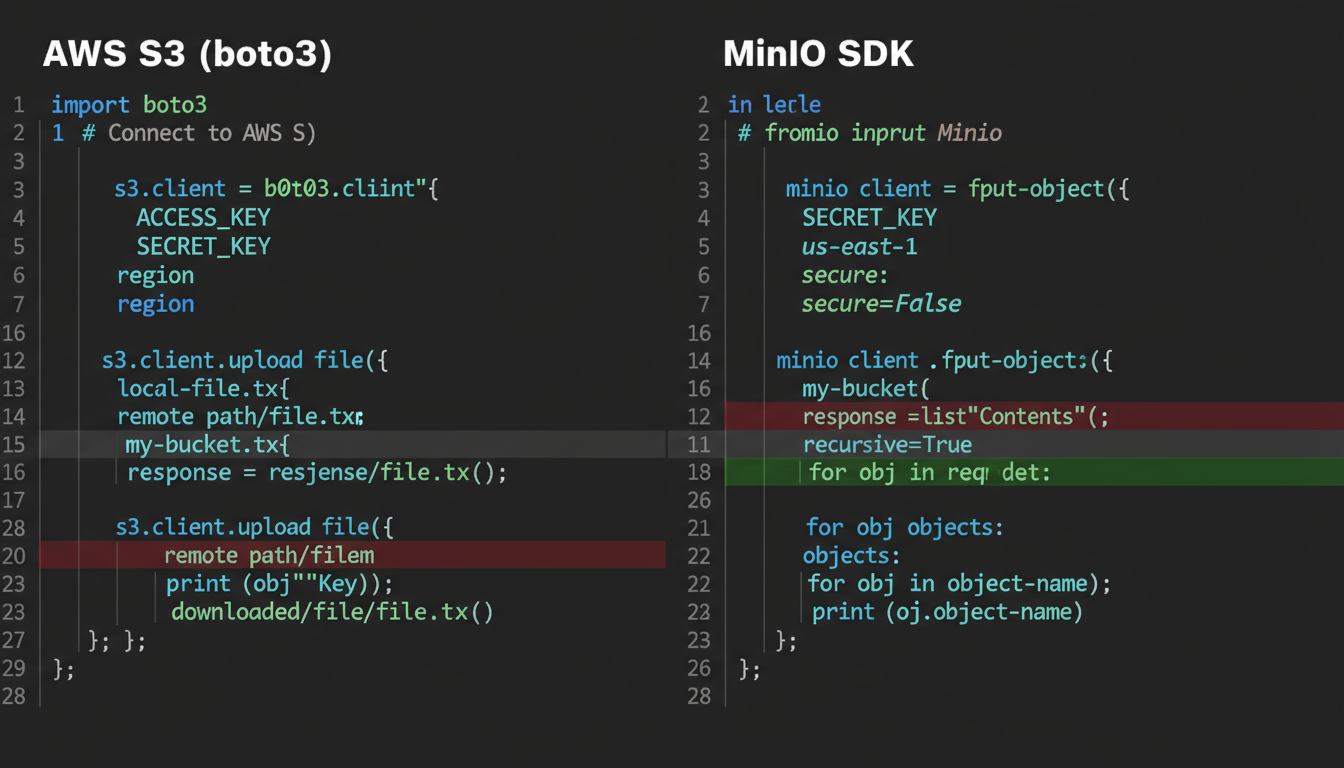

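```python
import os

import boto3
from minio import Minio

# Credentials come from the environment here (hypothetical variable names).
access_key = os.environ["MINIO_ACCESS_KEY"]
secret_key = os.environ["MINIO_SECRET_KEY"]

# Before: AWS S3
s3 = boto3.client('s3', region_name='us-east-1')

# After: MinIO
minio_client = Minio(
    'minio.example.com:9000',
    access_key=access_key,
    secret_key=secret_key,
    secure=True,  # connect over HTTPS
)
```

The changes were surprisingly minimal thanks to MinIO's S3 compatibility. We updated endpoints, adjusted error handling, and preserved functionality through a compatibility layer.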
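A compatibility layer of this kind can be sketched as a small put/get interface, assuming services only need those two operations. Services depend on an `ObjectStore` protocol; a MinIO-backed implementation translates the SDK's `S3Error` with code `NoSuchKey` into a backend-neutral error (boto3 reports the same condition as a `ClientError`); and an in-memory implementation serves unit tests. `ObjectStore`, `MinioStore`, `InMemoryStore`, and `ObjectNotFound` are hypothetical names, and the `put_object`/`get_object` calls follow the minio-py 7.x API.

```python
import io
from typing import Dict, Protocol


class ObjectNotFound(KeyError):
    """Hypothetical backend-neutral error for a missing object."""


class ObjectStore(Protocol):
    """The small surface our services call, whatever the backend."""

    def put(self, key: str, data: bytes, content_type: str = "application/octet-stream") -> None: ...

    def get(self, key: str) -> bytes: ...


class MinioStore:
    """ObjectStore backed by the MinIO Python SDK (a `minio.Minio` client)."""

    def __init__(self, client, bucket: str) -> None:
        self._client = client
        self._bucket = bucket

    def put(self, key: str, data: bytes, content_type: str = "application/octet-stream") -> None:
        # put_object needs a stream plus its exact length.
        self._client.put_object(
            self._bucket, key, io.BytesIO(data), length=len(data), content_type=content_type
        )

    def get(self, key: str) -> bytes:
        from minio.error import S3Error  # lazy import: module loads even without minio installed

        try:
            response = self._client.get_object(self._bucket, key)
        except S3Error as exc:
            # boto3 surfaced this as ClientError with Error.Code "NoSuchKey";
            # the MinIO SDK raises S3Error carrying the same code.
            if exc.code == "NoSuchKey":
                raise ObjectNotFound(key) from exc
            raise
        try:
            return response.read()
        finally:
            # Release the pooled HTTP connection back to the client.
            response.close()
            response.release_conn()


class InMemoryStore:
    """Dict-backed ObjectStore for unit tests; no network required."""

    def __init__(self) -> None:
        self._objects: Dict[str, bytes] = {}

    def put(self, key: str, data: bytes, content_type: str = "application/octet-stream") -> None:
        self._objects[key] = bytes(data)

    def get(self, key: str) -> bytes:
        try:
            return self._objects[key]
        except KeyError:
            raise ObjectNotFound(key) from None
```

Because callers only see `put`, `get`, and `ObjectNotFound`, moving a service between backends becomes a constructor change, and a boto3-backed class with the same two methods could sit alongside it during a transition.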

Rigorous Testing: Why We Had Zero User Reports

We validated 40+ storage-dependent endpoints through a comprehensive testing strategy:

- Unit tests for upload/download logic

- Integration tests against a MinIO mock server

- Canary testing with shadow traffic

- 48-hour production smoke tests
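Shadow traffic can be implemented at several layers. One application-level variant, sketched here under the assumption that every read goes through a single function, serves the response from the primary store (S3 during the migration) and reads the same key from the shadow store (MinIO) purely for comparison. `shadow_read` and the `stats` counter names are hypothetical.

```python
import logging
from typing import Callable, Dict

log = logging.getLogger("storage.shadow")

Reader = Callable[[str], bytes]


def shadow_read(key: str, read_primary: Reader, read_shadow: Reader, stats: Dict[str, int]) -> bytes:
    """Return the primary store's bytes; compare the shadow store's copy on the side.

    Shadow failures and mismatches are only counted and logged, so the canary
    can never break a user-facing request.
    """
    data = read_primary(key)  # errors here propagate exactly as before the migration
    try:
        shadow = read_shadow(key)
    except Exception:
        stats["shadow_errors"] = stats.get("shadow_errors", 0) + 1
        log.warning("shadow read failed for %s", key, exc_info=True)
        return data
    if shadow == data:
        stats["matches"] = stats.get("matches", 0) + 1
    else:
        stats["mismatches"] = stats.get("mismatches", 0) + 1
        log.warning("shadow mismatch for %s (%d vs %d bytes)", key, len(data), len(shadow))
    return data
```

In practice `read_primary` might wrap a boto3 `get_object` call and `read_shadow` a MinIO `get_object` call; sampling only a fraction of requests keeps the extra load on the new cluster modest.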

The result? Despite the complexity, we had zero user-reported issues post-migration.
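A complementary pre-cutover check is confirming that the mirror is complete. The sketch below, with hypothetical helper names, builds a key-to-size map from each side (boto3's `list_objects_v2` paginator for S3, `list_objects(..., recursive=True)` for MinIO) and reports keys that are missing or differ in size. It compares sizes rather than ETags because multipart-upload ETags depend on the part size used, so a faithful copy can legitimately carry a different ETag.

```python
from typing import Dict, List, Tuple


def s3_listing(s3_client, bucket: str) -> Dict[str, int]:
    """Map key -> size for every object in an S3 bucket, via a boto3 client."""
    sizes: Dict[str, int] = {}
    # list_objects_v2 returns at most 1,000 keys per call, so paginate.
    for page in s3_client.get_paginator("list_objects_v2").paginate(Bucket=bucket):
        for obj in page.get("Contents", []):
            sizes[obj["Key"]] = obj["Size"]
    return sizes


def minio_listing(minio_client, bucket: str) -> Dict[str, int]:
    """Map key -> size for every object in a MinIO bucket, via the minio SDK."""
    return {
        obj.object_name: obj.size
        for obj in minio_client.list_objects(bucket, recursive=True)
    }


def diff_listings(source: Dict[str, int], target: Dict[str, int]) -> Tuple[List[str], List[str]]:
    """Return (keys missing from target, keys present in both with different sizes)."""
    missing = sorted(k for k in source if k not in target)
    size_mismatch = sorted(k for k in source if k in target and source[k] != target[k])
    return missing, size_mismatch
```

With the clients from the refactoring step, `diff_listings(s3_listing(s3, "bucket-name"), minio_listing(minio_client, "bucket-name"))` should return two empty lists before traffic is switched. Extra keys that exist only in MinIO are not reported by this sketch.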

Key Takeaways for Your Cloud Strategy

This migration taught us valuable lessons that extend beyond cost savings:

1. Open-source alternatives can match enterprise solutions when chosen wisely

2. Incremental execution turns overwhelming projects into manageable wins

3. Abstraction layers (like NGINX) enable seamless infrastructure transitions

4. Proper planning and testing make complex migrations boring—in the best way possible

The outcome speaks for itself: $1,200 annual savings, enhanced data sovereignty, and infrastructure that scales on our terms. Most importantly, we proved that with careful planning, even major infrastructure changes can be executed smoothly.

You can read more about the project itself on its project page.

Jonathan

Content creator and developer.
